Before the world fell over and caught fire, I went to PyCon for the first time. Like all work "conferences" it was pretty nice - all expenses paid, saw some interesting talks and got to meet a bunch of new people.

One set of talks I was particularly interested in covered building your first Python package and package maintenance in general - I had a few projects I thought others might find useful, but at the time they were bare files in GitHub repos. The main talk that inspired me to get off my ass and stop letting my dreams be memes was by Hynek Schlawack. It was also the first time I'd heard about what happened (or was likely to happen) to Travis, and it planted the seed in my mind to move my CI somewhere else sooner rather than later. Of course, sooner turned into later, and while wallowing in beer flu funemployment I decided to finally update the CI pipeline for my projects, and maybe throw some CD in while I was at it. I'd heard good things about Azure Pipelines, so I was leaning towards it while looking for a new CI provider, but after finding Hynek's article on GitHub Actions I couldn't be bothered to create a new account somewhere else. A couple of days later I had my first repo fully migrated to GHA - a couple of days I hope anyone reading this post will be able to save.

A few things before getting into this:

- The package has to already be prepared for upload to package repositories

- You have to have an account on PyPI (and it is highly suggested you have an account on TestPyPI) before setting this up

- The package has to already exist on PyPI/TestPyPI (so upload a 1.0.0 to PyPI if you have one available, and the latest prerelease to TestPyPI)

- The package has to use setuptools_scm to handle versioning

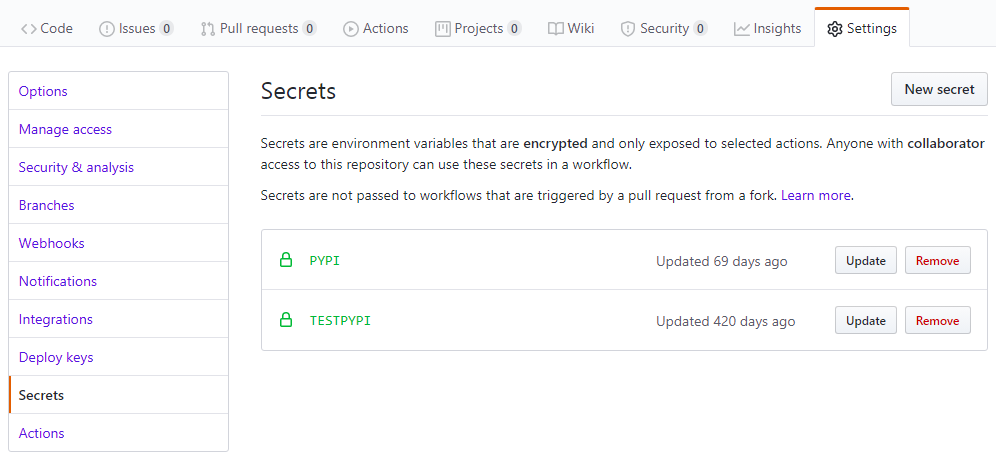

The reason the package must already exist on the package repositories is that this is the only way to get an API token scoped to that package instead of your entire account. Start by getting an API token for the package on both PyPI and TestPyPI, then add them as secrets to the corresponding repository.

That should be all the necessary prepwork - without further ado, let's dive in:

    name: Pipeline
    on:
      push:
        branches: [master]
      pull_request:
        branches: [master]

This is a pretty basic preamble - I named my CI/CD pipeline "Pipeline" because I don't have much imagination and calling it "CI/CD" didn't work with my status badges; my pipeline is set to kick off when I open PRs against master or when I push to master.

    jobs:
      test:
        name: Python ${{ matrix.python-version }} on ${{ matrix.os }}
        runs-on: ${{ matrix.os }}
        strategy:
          fail-fast: false
          matrix:
            python-version: [3.6, 3.7, 3.8, pypy3]
            os: [ubuntu-latest, windows-latest]
        env:
          OS: ${{ matrix.os }}
          PYTHON: ${{ matrix.python-version }}

This is the start of the test job; unsurprisingly, this job is meant to run tests against the desired versions. name sets the name of the individual job runs (really only useful when using a job matrix to test against multiple Python versions). Setting fail-fast to false means the queued or currently-executing runs for the given job won't be cancelled prematurely if one of them fails; this is useful for determining whether a failure is limited to a single interpreter/OS combo or is more widespread. I typically target every version of CPython feature-compatible with the most recent PyPy release along with PyPy itself, so currently that's 3.6, 3.7, 3.8 and PyPy, which targets 3.6. I originally only tested against a UNIX OS on Travis since all my packages are pure Python, but since GHA is built on Azure Pipelines and makes Windows testing easy I might as well throw that in too. The env section is currently not useful and you can skip it when copying this workflow.
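
To make the job matrix concrete, here is a small Python sketch of the combinations it expands to. It only illustrates the cross product of the two lists in the workflow, not how GitHub Actions implements matrices; the variable names are made up for the example.

```python
from itertools import product

# Values copied from the workflow's strategy.matrix section.
python_versions = ["3.6", "3.7", "3.8", "pypy3"]
operating_systems = ["ubuntu-latest", "windows-latest"]

# One run per (python-version, os) pair, labelled the same way as the
# job's name template: "Python <version> on <os>".
run_names = [
    f"Python {version} on {os_name}"
    for version, os_name in product(python_versions, operating_systems)
]

for run_name in run_names:
    print(run_name)
```

Four interpreters times two operating systems gives eight runs, and with fail-fast set to false all eight report their own result.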

        steps:
          - uses: actions/checkout@v2
          - uses: actions/setup-python@v2
            with:
              python-version: ${{ matrix.python-version }}
          - name: Install test dependencies
            run: pip install .[test]
          - name: Run tests
            run: make ci-test
          - name: Upload coverage to Codecov
            uses: codecov/codecov-action@v1
            with:
              # env_vars: PYTHON,OS
              fail_ci_if_error: true

This is the actual meat of this job, which checks out the code, sets up Python with the interpreter version specified in the above matrix, installs all necessary dependencies for the package plus the extra dependencies for testing, runs the tests and uploads code coverage statistics. If you don't have test-specific dependencies you could use pip install . instead. I use Makefiles so I don't have to remember how to run tests, and they make migrating CI services much easier (I can't think of a CI provider that doesn't let me run make), but if you want to skip it and put your commands right in the YAML file, go ahead. I use Codecov to manage my code coverage statistics, but you can tell GHA isn't a flagship integration since Codecov doesn't differentiate individual build artifacts like it does with Travis CI.

Supposedly you used to be able to include environment variables when sending reports, but that functionality has been removed in the version I'm using.

Now onto the next job:

      release:
        name: Publish Release
        needs: test
        runs-on: ubuntu-latest

This is the start of the release job, which publishes source dists and wheels automatically so you don't have to. This job needs the test job to have completed successfully in order to run, which makes sense as you don't want to publish a package that didn't pass tests. I would prefer this job to be in its own file, but current GHA limitations mean a job can only need jobs that are defined in the same file. Since all my packages are pure Python I can make a single universal package; I choose to do it using Python 3.6 on Ubuntu.

        steps:
          - uses: actions/checkout@v2
          - uses: actions/setup-python@v2
            with:
              python-version: 3.6
          - name: Fetch tag history
            run: git fetch --prune --unshallow --tags
          - name: Prepare build dependencies
            run: pip install -U pep517 twine setuptools
          - name: Build distributions
            run: make build
          - name: Get release version
            run: |
              echo "::set-env name=version::$(python setup.py --version)"
              echo release version is $(python setup.py --version)
          - name: Check distributions
            run: twine check dist/*

Like in the previous job, the code is first checked out and a Python 3.6 interpreter is initialized. The tag history is fetched because tags are not fetched during checkout and setuptools_scm uses them to determine the version of the package being built. My packages use pep517 to build but it is not installed by default, so I install it manually alongside twine. The default version of setuptools as of 6/15/20 is also too old to use in a PEP 517 workflow, so I update it. The release version is saved to an environment variable so later steps in the job can check it.

          - name: Publish TestPyPI distributions
            if: >-
              github.event_name == 'push'
              && (contains(env.version, 'rc')
              || contains(env.version, 'dev'))
            uses: pypa/gh-action-pypi-publish@master
            with:
              user: __token__
              password: ${{ secrets.TESTPYPI }}
              repository_url: https://test.pypi.org/legacy/
          - name: Publish PyPI distributions
            if: >-
              github.event_name == 'push'
              && !contains(env.version, 'rc')
              && !contains(env.version, 'dev')
            uses: pypa/gh-action-pypi-publish@master
            with:
              user: __token__
              password: ${{ secrets.PYPI }}

This is the heart of the job. The two steps are mutually exclusive, and with the default settings for setuptools_scm, packages go to TestPyPI by default. Both steps only fire on pushes so pull requests don't accidentally publish packages. Each step checks the previously-defined version variable: if the version contains the string "dev" (which setuptools_scm appends to version strings by default) or "rc", the release is published to TestPyPI; otherwise it is published to PyPI.
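
If the two if: conditions are hard to read in YAML, the same decision can be sketched in Python. This is only a restatement of the logic for illustration: choose_repository and the version strings passed to it are hypothetical, and nothing here runs as part of the workflow.

```python
from typing import Optional


def choose_repository(event_name: str, version: str) -> Optional[str]:
    """Return "testpypi", "pypi", or None when nothing should be published."""
    # Both publish steps are gated on push events, so PR builds never upload.
    if event_name != "push":
        return None
    # GitHub's contains() ignores case for strings; lowercasing mirrors that.
    lowered = version.lower()
    is_prerelease = "rc" in lowered or "dev" in lowered
    return "testpypi" if is_prerelease else "pypi"


# Hypothetical version strings, chosen only to exercise each branch.
print(choose_repository("push", "1.0.1.dev3"))    # testpypi
print(choose_repository("push", "1.1.0rc1"))      # testpypi
print(choose_repository("push", "1.1.0"))         # pypi
print(choose_repository("pull_request", "1.1.0")) # None
```

Because the second condition is the exact negation of the first, a push always triggers exactly one of the two publish steps.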

And that's pretty much it - you can push commits as normal and they'll be sent to TestPyPI for people to try if they wish, but when you're ready you can tag a commit with a new version, push both the commit and the tag, and have the official release on PyPI proper. I didn't add other intermediate steps like linting/checking docs because I don't have those things, but this is a solid base to add such things yourself.